In this article I will explain how the size of Bitcoin blocks is determined in the absence of an arbitrary size limit. Oleg has already written an excellent post on this topic, but I have a few things to add and I wish to relate the discussion to the current debate over increasing the block size.

If the maximum limit on block size is removed, it is not the case that blocks will simply grow without limit. There is a natural size that is determined by the market. The block size will change until the marginal revenue of adding more transactions approaches the marginal cost; this could happen because the block grows in size until a limit is reached, or because the costs of mining and transmitting blocks change as a result of investment in the capital goods which make up the network, or a combination of the two.

The marginal revenue of a transaction is easy to understand—it is simply the value of the transaction fee relative to the size of the transaction. The marginal cost comes from two sources—the risk of generating an orphaned block and the bandwidth required to receive the transactions. Right now, blocks propagate at different speeds depending on how big they are. This puts a sharp economic limit on their size because it means that each additional transaction in a block increases its chances of being orphaned. If two blocks are generated at roughly the same time, the smaller one will propagate faster and therefore has a greater probability of being accepted throughout the network. This risk limits the block size.
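To make the tradeoff concrete, here is a minimal sketch of how a miner might weigh fees against orphan risk. Everything numeric in it is an assumption for illustration, not something measured: block discovery is treated as a Poisson process with a 600-second mean interval, propagation delay is assumed to grow linearly with block size at a hypothetical `SECONDS_PER_BYTE`, and an orphaned block is assumed to forfeit the whole reward plus fees. The function names are hypothetical too.

```python
import math

BLOCK_INTERVAL = 600.0    # mean seconds between blocks (Bitcoin's target)
SECONDS_PER_BYTE = 1e-5   # hypothetical propagation delay per byte
BLOCK_REWARD = 25.0       # illustrative block subsidy in BTC

def orphan_probability(size_bytes):
    """Chance that a competing block is found while ours is still propagating."""
    delay = size_bytes * SECONDS_PER_BYTE
    return 1.0 - math.exp(-delay / BLOCK_INTERVAL)

def expected_revenue(size_bytes, fees):
    """Reward plus fees, discounted by the chance of being orphaned."""
    return (BLOCK_REWARD + fees) * (1.0 - orphan_probability(size_bytes))

def build_block(mempool):
    """mempool is a list of (fee_btc, size_bytes) pairs.

    Transactions are considered from highest to lowest fee rate, and the
    loop stops at the first one that would not raise expected revenue.
    """
    chosen, size, fees = [], 0, 0.0
    by_fee_rate = sorted(mempool, key=lambda tx: tx[0] / tx[1], reverse=True)
    for fee, tx_size in by_fee_rate:
        if expected_revenue(size + tx_size, fees + fee) <= expected_revenue(size, fees):
            break  # marginal cost now exceeds marginal revenue
        chosen.append((fee, tx_size))
        size += tx_size
        fees += fee
    return chosen, size, fees

chosen, size, fees = build_block([(0.00001, 250), (0.001, 250)])
print(chosen, size, fees)
```

Under these assumed numbers the miner includes the transaction paying 0.001 BTC and leaves out the one paying 0.00001 BTC, because the second fee is smaller than the expected loss its 250 bytes add. No explicit size cap appears anywhere in the code; the block simply stops growing where marginal revenue meets marginal cost.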

However, there is a proposal from Gavin Andresen which would reduce block propagation time from O(n) to O(1) in the size of the block using invertible Bloom lookup tables. Should this be implemented, the risk of an orphaned block will no longer limit the block size because large blocks will propagate as quickly as small ones. Instead, the bandwidth of the network will limit the size of the blocks. This, by the way, is a reason that O(1) block propagation needs to be implemented as quickly as possible: when that happens, the miners will demand to start generating larger blocks.
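The difference between the two regimes can be sketched in a few lines. This is only an illustration that takes the O(1) claim at face value; the delay constants and function names are hypothetical, and orphan risk is modeled as the chance that a competing block appears during the propagation delay, with blocks arriving as a Poisson process.

```python
import math

BLOCK_INTERVAL = 600.0  # mean seconds between blocks (Bitcoin's target)

def orphan_probability(delay_seconds):
    """Chance that a competing block is found during the propagation delay."""
    return 1.0 - math.exp(-delay_seconds / BLOCK_INTERVAL)

def delay_linear(size_bytes, seconds_per_byte=1e-5):
    """O(n) relay: delay grows with block size (hypothetical rate)."""
    return size_bytes * seconds_per_byte

def delay_constant(size_bytes, fixed_seconds=1.0):
    """O(1) relay: delay is the same whatever the block size (hypothetical value)."""
    return fixed_seconds

def marginal_orphan_risk(delay_fn, size_bytes, extra_bytes=250):
    """Extra orphan risk from adding one more transaction of extra_bytes."""
    before = orphan_probability(delay_fn(size_bytes))
    after = orphan_probability(delay_fn(size_bytes + extra_bytes))
    return after - before

print(marginal_orphan_risk(delay_linear, 1_000_000))    # positive
print(marginal_orphan_risk(delay_constant, 1_000_000))  # 0.0
```

With linear delay every added transaction carries a small positive orphan cost; with constant delay that cost is zero, so some other cost, such as bandwidth, has to be what stops the block from growing.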

What determines the bandwidth of the network? Right now the Bitcoin network has a problem because nobody pays for relaying transactions; the cost falls entirely on the people running full nodes. Ultimately this will have to change, because otherwise there is the possibility that functions the network requires would not be served properly. What we should want is for the network to set transaction fees and to adjust so that it relays only those transactions which would be profitable for miners to include in blocks. In turn, miners should be willing to pay to receive transactions which they can mine, and ordinary bitcoin users should be willing to pay to receive transactions which are likely to be included in the latest blocks. Maybe this will one day be accomplished with Bitcoin’s micropayment channels.

This is all related to the debate about increasing the maximum block size because there is a myth, which stems from a poor understanding of the economics of block size, that increasing the maximum block size would reduce Bitcoin’s security. For example, on Bitcoin-Assets, Mircea Popescu has argued:

so average bandwidth in US (where Gavin is based) is 10Mbit. An incearse of .5 per year is like what 15 next then 22.5 after that etc etc < << in 20 years the per-block subsidy will be just about 40 bitcents. at that same time, gavin's block size will be 110 mb. So one block will fit 100x as many tx, and each solved block will yield 50x less in subsidies. that's a 5k drop over 20 years. it's high enough to kill the price, and with it mining, and with all that bring bitcoin back within the financial ability of the us, which is exactly the point of all this fucking derpage.

In light of the foregoing, it should be clear that Mircea, not Gavin, is derping here. First, increasing the maximum block size does not imply that the network will use more bandwidth. Raising the limit only provides the option, not the necessity. Miners will tend to allow for more transactions if it is profitable to do so, and they will never make blocks so big that they would be unlikely to propagate throughout the network.

Second, and more importantly, Mircea is thinking only in terms of an increased cost of a higher bandwidth rather than its effect on the revenue of mining or the opportunity cost of not allowing the block size to increase. The block reward is still declining regardless of what happens to the block size. Therefore, Bitcoin’s security must be backed by a new source of revenue eventually. Bitcoin cannot pay miners forever with new bitcoins or else it would have no value today. That means transaction fees and the mass adoption of Bitcoin. If Bitcoin cannot handle a high transaction volume, then it is fundamentally flawed.

It is true that an artificial limit on the block size would limit the cost of running a full node, but it would also limit the revenue available from mining. It is not necessarily the case, therefore, that allowing for an increased network bandwidth would lead, in the long term, to a lower hash rate than there would have been otherwise. If the block size were limited, then not only would the number of transactions be limited, but each one would have to be very expensive in order to pay for a high hash rate. Bitcoin could not function easily as a medium of exchange under those circumstances, and it clearly has much better prospects if it can process many transactions with low fees. It is only through mass adoption and lots of transactions that Bitcoin could possibly sustain a high difficulty in the long run.
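The arithmetic behind this point is simple enough to sketch. The figures below are made up for illustration: a per-block revenue of 25 BTC that miners are assumed to need to keep the hash rate where it is, a subsidy that has fallen to 0.4 BTC, and two hypothetical block capacities. The function name is hypothetical as well.

```python
def required_fee_per_tx(target_revenue_btc, subsidy_btc, tx_per_block):
    """Average fee each transaction must pay to cover what the subsidy does not."""
    shortfall = max(target_revenue_btc - subsidy_btc, 0.0)
    return shortfall / tx_per_block

# Hypothetical scenario: same revenue target, same subsidy, different capacity.
small_blocks = required_fee_per_tx(25.0, 0.4, tx_per_block=2_000)
large_blocks = required_fee_per_tx(25.0, 0.4, tx_per_block=200_000)

print(small_blocks)                 # about 0.0123 BTC per transaction
print(large_blocks)                 # about 0.000123 BTC per transaction
print(small_blocks / large_blocks)  # about 100
```

Holding miner revenue fixed, a hundredfold increase in transactions per block means each transaction needs to pay a hundredth of the fee. Whether that many transactions actually show up depends on adoption, which is the point of the paragraph above.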

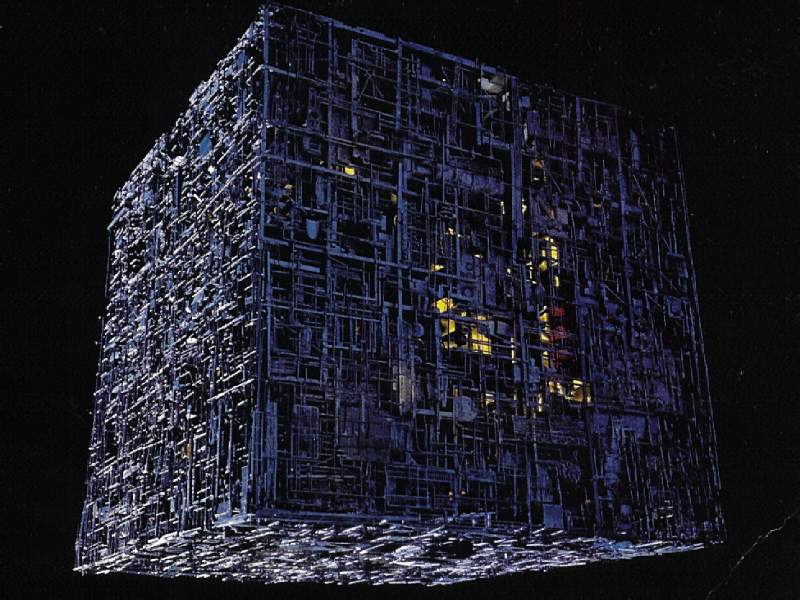

Image source: http://www.voyager.wz.cz/